Finishing the initial version of your site is only the first step on your journey as a website owner. Now that you have a website, tracking its vital statistics is crucial for success.

It’s easy to overlook seemingly minor issues that hurt your website’s professionalism, security, Google rankings, and ultimately the revenue you make from it.

Luckily, there are a variety of tools and services that take the grunt work out of managing a website. When you maintain these eight essential elements of any successful website, your site’s odds of being successful will increase tremendously.

If your website is suddenly getting a lot more (or fewer) visitors, then it’s good to know when it started happening and why so that you can either capitalize on its newfound popularity or refresh your SEO strategy. It’s also useful to see which devices your users browse your site with, which sites they came from, and where they’re located.

User analytics packages (e.g., Google Analytics or Cloudflare Web Analytics) allow you to track a variety of statistics about the people who use your website, and what they do on it. Google Analytics can even send you an email alert if certain conditions are met (e.g., a sudden spike in traffic).

If you’re getting a lot of hits to your homepage but only a few purchases, then you can also see how much time users are spending on your site, and how many of them make it to each step in the process of buying something.

Links you make to other sites could suddenly stop working at any time if a website that you linked to is revamped, or a domain is sold to someone else. As bad as 404 errors are for your professionalism, the worst situation is when a website changes hands and redirects to something like a phishing site, or a parked domain full of ads.

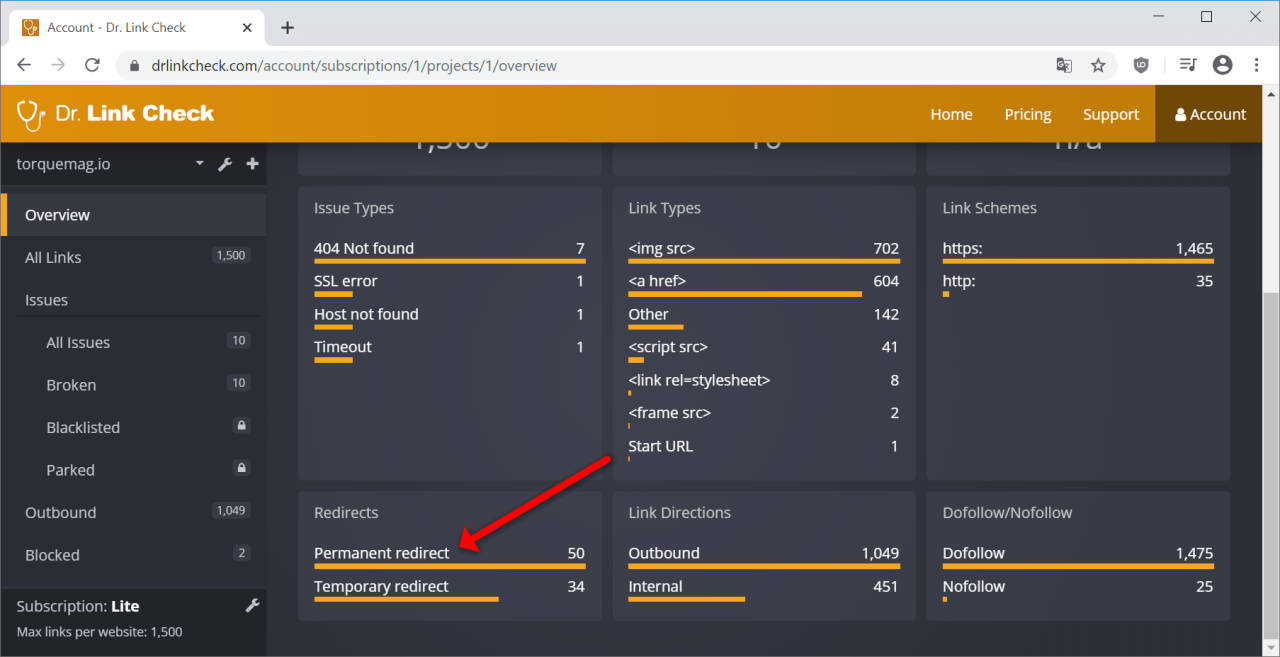

To avoid having to manually check every link all the time, Dr. Link Check makes sure that all the links on your entire site (including images and external stylesheets) load correctly, have valid SSL certificates, aren’t on a blacklist of malware and phishing sites, and haven’t been parked.

After crawling every link across your whole website, Dr. Link Check prepares a searchable report and lets you download the data as a CSV file to do your own analytics.

A website can’t be successful if it’s down, so services like Uptime Robot and Pingdom check your website’s status every few minutes to make sure it hasn’t encountered an outage. As soon as it does, these services will alert you via an SMS message, email, or various other contact methods, so you can get it working again as quickly as possible.

Uptime Robot can also check protocols other than HTTP/S and generate status pages. Pingdom includes a full performance monitoring and analytics solution, as well. Each will load your site from multiple locations to determine if an outage is only affecting people in a certain geographical area.

Nothing erodes user trust and confidence quite like a security breach, so it’s of the utmost importance to avoid them entirely. Even if you follow good development practices and keep all your software up to date, it’s still possible to mess up somewhere, leaving an opportunity for a hacker to sabotage your business.

While automated tools aren’t a perfect substitute for a professional security audit, tools including Website Vulnerability Scanner, Mozilla Observatory, and WP-Scan (if you have a WordPress-based site) can help pinpoint configuration errors, XSS and SQL injection bugs, and outdated server software to keep your customers’ data secure.

Whether you simply have a few outdated plugins, or you forgot to sanitize user input in a hardly used form, an automated check can be the quickest way to find security bugs before hackers take advantage of them.

Your site’s place in search results for any given search term is always changing. Therefore, it’s important to be notified if you suddenly slip off the first page of results for a specific query.

SERPWatcher and RankTrackr are services that check your site’s position in search results on a daily basis and send you a message when it suddenly changes. Additionally, both offer dashboards that display all the different keywords that lead to your site, and where your site has ranked for those searches over time.

Many of these services can also track interactions from social media sites and widgets, so you can completely understand how your users find your website.

Forgetting to renew your SSL certificate is just as bad as your site going down unexpectedly, but with the added consequence that many users may lose trust in your site’s security. Worse, not renewing your domain on time could allow someone to buy it and use it for something else entirely.

To avoid potential customers being turned away by “your connection is not secure” errors, set a calendar reminder and use a service like CertsMonitor to make absolutely certain that you renew on time. Many registrars and certificate merchants offer auto-renew, as well, so you can truly “set it and forget it”.
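As a complement to a monitoring service, the expiry check itself can be sketched in a few lines of Python. This is a minimal sketch using only the standard library; the function names `days_until_expiry` and `fetch_not_after` are made up for this example and are not part of any service mentioned here.

```python
import socket
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Return the number of days between `now` and a certificate's expiry.

    `not_after` uses the format found in ssl.SSLSocket.getpeercert(),
    for example "Jan  2 00:00:00 2030 GMT".
    """
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    return (expires - now).total_seconds() / 86400


def fetch_not_after(host: str, port: int = 443) -> str:
    """Read the notAfter field of the certificate served by `host` (needs network access)."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

A scheduled job could call `days_until_expiry(fetch_not_after("example.com"), datetime.now(timezone.utc))` and send a reminder once the result drops below a threshold of your choosing, such as 14 days.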

Did you forget to add a title to a page? Did you miss ALT attributes on a few images? No robots.txt? Search engines will drop your page’s position in the rankings if you don’t fix issues like these.

SEOptimer and RavenTools crawl your site and find every instance of SEO mistakes on every page. Implementing the suggestions from either tool can significantly boost your rankings on Google and other engines. Google itself also offers tools to identify issues and assess your site’s speed and mobile compatibility.
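To give an idea of what such a crawler looks for on a single page, here is a rough Python sketch that flags a missing title and images without ALT text. It only uses the standard library, the class and function names are invented for this example, and it covers a tiny fraction of what the tools above check.

```python
from html.parser import HTMLParser


class BasicSeoAudit(HTMLParser):
    """Collects the page title and the sources of images lacking alt text."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.images_missing_alt = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # Note: this also flags alt="", which is valid for decorative images.
            self.images_missing_alt.append(attrs.get("src", ""))

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit(html):
    """Return a list of human-readable issues found in one HTML document."""
    parser = BasicSeoAudit()
    parser.feed(html)
    issues = []
    if not parser.title.strip():
        issues.append("missing <title>")
    for src in parser.images_missing_alt:
        issues.append(f"image without alt text: {src}")
    return issues
```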

The PageRank algorithm deep within the heart of Google ranks sites based on the number and quality of links that point to them. The idea is that high-quality websites will be linked from many other well-ranking sites. When your website is linked from a reputable blog or goes viral on a social media platform, you’ll notice that your site is displayed more prominently in search results.

Monitor Backlinks notifies you whenever new links, from good and bad sites alike, begin pointing to your site. It can also give insight into which websites would give you the most beneficial backlinks.

It’s easy to forget to monitor some vital sign on your website, leading to a significant loss of business. Therefore, using a service to address each of the areas that need to be monitored will allow you to focus on your business, instead of the boring tasks required to keep your website up and running.

From SEO concerns to security, uptime, and even link rot, you can count on these monitoring services to alert you when something goes wrong.

FTP, short for File Transfer Protocol, is an old standard for transferring files from one computer to another. The protocol was first proposed in 1971, long before the advent of the modern TCP/IP-based internet. In spite of its age, FTP is still commonly used, and hundreds of thousands of websites link to files stored on FTP servers (using URLs that start with ftp://).

Until recently, that wasn’t an issue. All major browsers had built-in support for FTP and were able to handle ftp:// links. This situation is changing. The developers of Chrome disabled FTP in version 88 (released on January 19, 2021), and it’s likely that other browsers will follow suit.

The rationale behind this decision is that FTP in its original form is an insecure protocol that doesn’t support encryption. This is understandable, but practically, it breaks existing ftp:// links for the majority of users.

If you want to make sure that your website is free of ftp:// links, follow the steps below.

Go to https://www.drlinkcheck.com/, enter the URL of your website, and press the Start Check button.

Wait until the check is complete and the website is fully crawled. The number of found ftp:// links is displayed in the Link Schemes section. If there are no ftp: items in the list, the crawler didn’t locate any ftp:// links on your site and you are all good and can skip the rest of the post.

Click the ftp: item under Link Schemes to get to the list of ftp:// links and review each item in the list. If you hover over a link and hit the Details button, you can see which pages contain the link (under Linked from). A click on Source will show you the exact location in the HTML source code.
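If you only want to spot-check a single HTML file, the same idea can be sketched locally in Python. This is a simplified illustration rather than how the crawler works internally; it only looks at `href` and `src` attributes, and the names `FtpLinkFinder` and `find_ftp_links` are made up for this example.

```python
from html.parser import HTMLParser


class FtpLinkFinder(HTMLParser):
    """Records every href/src attribute whose value starts with ftp://."""

    def __init__(self):
        super().__init__()
        self.ftp_links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                url = value.strip()
                # URL schemes are case-insensitive, so compare in lowercase.
                if url.lower().startswith("ftp://"):
                    self.ftp_links.append((tag, url))


def find_ftp_links(html):
    """Return (tag, url) pairs for all ftp:// references in the given HTML."""
    finder = FtpLinkFinder()
    finder.feed(html)
    return finder.ftp_links
```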

Now it’s time to decide what to do with the found links. Here are a few options:

https:// URL you can link to.

Fifty years after its introduction, FTP is slowly being phased out as a protocol for serving files on the internet. With major browsers dropping support for FTP, now is a good time to clean up your website and get rid of all FTP links. You surely don’t want your website to appear outdated and broken.

Links are one of the most important factors in how search engines determine the ranking of a website. If you place a link from your website to another, search engines consider this a vote for the relevance and quality of the linked content. Just like a recommendation to friends or family in real life, a link is something that you put your good name behind.

If you don’t want to endorse a link, adding rel="nofollow" tells search engines to ignore it when ranking pages. This makes sense for sites that you want to warn your visitors about (like a scam or a hack), user-generated links that you haven’t reviewed yet (like in the comments section of a blog), or links in ads that you were paid to place on your website.

Considering the importance of marking links as nofollow (or not), it’s a good idea to periodically check if all your outbound links are correctly qualified. With a small website of only a few pages, you may be able to do this by hand, but larger sites require an automated solution. This is where Dr. Link Check comes into play. Our service is not only a great broken link checker, but can also give you an inventory of all the links on your website.

Head to the Dr. Link Check homepage, enter the address of your website, and click on Start Check.

While crawling your website, Dr. Link Check displays the number of found Dofollow and Nofollow links under Dofollow/Nofollow on the Overview page. Click on Nofollow to list the found links.

As you are probably only interested in links pointing to other websites, limit the report to outbound links by clicking the Add button in the Filter bar on the top and selecting Direction from the menu.

The list of links might also contain links found in image (<img src=...>) or script (<script src=...>) tags. If you want to restrict the report to normal page links, click again on Add and select Link type from the filter menu.

With these two filters, you've now identified all outbound nofollow links on your website. If you want to see all dofollow links instead, simply click on Nofollow in the filter bar and select Dofollow from the menu.
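For a single page, the distinction the report makes can be approximated with a short Python sketch. It assumes that a link counts as outbound when its host name differs from the page's host name (so subdomains are treated as external), and the class name `LinkInventory` is invented for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkInventory(HTMLParser):
    """Sorts the outbound <a> links of one page into nofollow and dofollow."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.page_host = urlsplit(page_url).hostname
        self.nofollow = []
        self.dofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        target = urljoin(self.page_url, href)
        parts = urlsplit(target)
        if parts.scheme not in ("http", "https") or parts.hostname == self.page_host:
            return  # skip internal links and non-web schemes such as mailto:
        # rel is a space-separated token list, e.g. rel="noopener nofollow".
        rel_tokens = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow.append(target)
        else:
            self.dofollow.append(target)
```

After calling `feed()` with a page's HTML, the `nofollow` and `dofollow` lists hold the outbound links of that page in document order.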

If you have a paid subscription (Standard plan and above), you can create rules for which links to follow and which links to ignore:

While previously a rule could only be based on the URL of a link (example: Url ENDSWITH ".gif"), it’s now also possible to include or exclude links based on where they are found in the HTML code. As an example, the following exclude rule makes our crawler ignore all <a> tags found inside HTML elements that have class="footer":

HtmlElement = ".footer a"

If you have worked with CSS before, the syntax should already be familiar to you. The table below lists the supported ways of selecting HTML elements:

| Selector | Description |
|---|---|
| .class | Selects all elements that have the specified class |
| #id | Selects an element based on the value of its ID attribute |
| element | Selects all elements that have the specified tag name |
| [attr] | Selects all elements that have an attribute with the specified name |
| [attr=value] | Selects all elements that have an attribute with the specified name and value |
| [attr~=value] | Selects all elements that have an attribute with the specified name and a value containing the specified word (which is delimited by spaces) |
| [attr\|=value] | Selects all elements that have an attribute with the specified name and a value equal to the specified string or prefixed with that string followed by a hyphen (-) |
| [attr^=value] | Selects all elements that have an attribute with the specified name and a value beginning with the specified string |
| [attr$=value] | Selects all elements that have an attribute with the specified name and a value ending with the specified string |
| [attr*=value] | Selects all elements that have an attribute with the specified name and a value containing the specified string |
| \* | Selects all elements |
| A B | Selects all elements selected by B that are inside elements selected by A |
| A > B | Selects all elements selected by B where the parent is an element selected by A |
| A ~ B | Selects all elements selected by B that follow an element selected by A (with the same parent) |
| A + B | Selects all elements selected by B that immediately follow an element selected by A (with the same parent) |
| A, B | Selects all elements selected by A and B |
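The attribute operators are easy to mix up, so here is a small Python sketch of how each one compares an attribute value. It follows the standard CSS rules as described in the list of selectors above; the function `attr_matches` is purely illustrative and not part of the rule syntax.

```python
def attr_matches(actual, operator, expected):
    """Return True if attribute value `actual` satisfies `[attr<operator>expected]`.

    `actual` is None when the element does not have the attribute at all.
    """
    if actual is None:
        return False
    if operator == "=":
        return actual == expected
    if operator == "~=":
        # One of the space-separated words must equal the expected value.
        return expected in actual.split()
    if operator == "|=":
        # Exact match, or the expected value followed by a hyphen.
        return actual == expected or actual.startswith(expected + "-")
    if operator == "^=":
        return bool(expected) and actual.startswith(expected)
    if operator == "$=":
        return bool(expected) and actual.endswith(expected)
    if operator == "*=":
        return bool(expected) and expected in actual
    raise ValueError(f"unsupported operator: {operator}")
```

Note the difference between `~=` and `*=`: a value of "nofollower" contains the string "nofollow", but not the word "nofollow".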

Equipped with this knowledge, it’s possible to construct quite powerful rules:

| Example | Matched HTML elements |
|---|---|
| HtmlElement = "#search > a, #filter > a" | <a> tags directly under elements with the IDs search or filter |
| HtmlElement = "a[rel~=nofollow]" | <a> tags with a rel="nofollow" attribute |
| HtmlElement = "img[src$=.png]" | <img> tags with an src attribute value ending in .png |
| HtmlElement = ".comments *" | Everything inside elements with a comments class |
| HtmlElement = "head > link[rel=alternate][hreflang=en-us]" | All <link rel="alternate" hreflang="en-us"> elements directly inside the <head> tag |
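To make the descendant selector from the ".footer a" rule more concrete, the following Python sketch separates the links of a page into those that the rule would match and those it would not. It is a simplified model built on the standard library's HTML parser, not the crawler's actual implementation, and the class name `FooterLinkFilter` is invented for this example.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag and therefore never contain children.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class FooterLinkFilter(HTMLParser):
    """Splits <a href> values by whether the selector ".footer a" matches them."""

    def __init__(self):
        super().__init__()
        self.open_elements = []  # stack of (tag, has_footer_class)
        self.kept = []
        self.ignored = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            # ".footer a" needs an ancestor with the class, not the <a> itself.
            inside_footer = any(flag for _, flag in self.open_elements)
            (self.ignored if inside_footer else self.kept).append(attrs["href"])
        if tag not in VOID_TAGS:
            classes = (attrs.get("class") or "").split()
            self.open_elements.append((tag, "footer" in classes))

    def handle_endtag(self, tag):
        # Pop back to the nearest matching open element; ignore stray end tags.
        for i in range(len(self.open_elements) - 1, -1, -1):
            if self.open_elements[i][0] == tag:
                del self.open_elements[i:]
                break
```

After `feed()` has processed a page, `ignored` contains the links an exclude rule of ".footer a" would skip, and `kept` contains the rest.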

This feature has been in beta for a while and has proven to be quite a valuable addition. We hope you will find it as useful as we do. If you run into any problems or have a suggestion, please drop us a note.

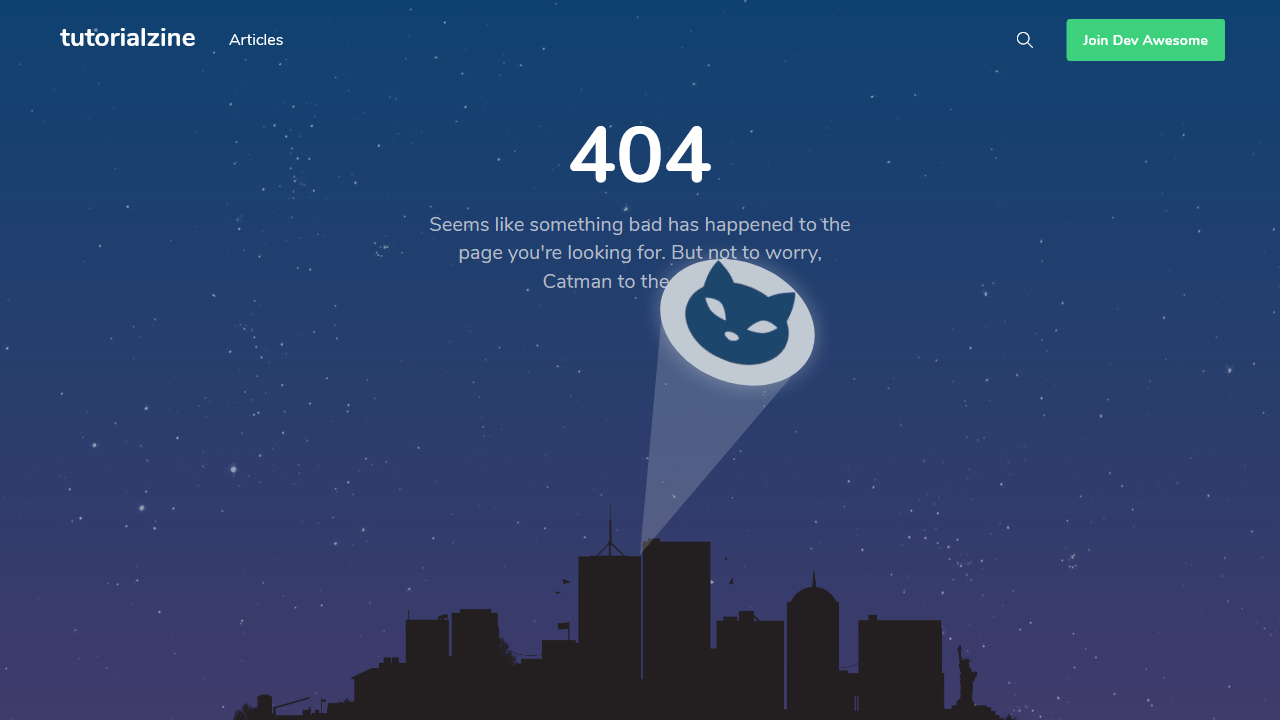

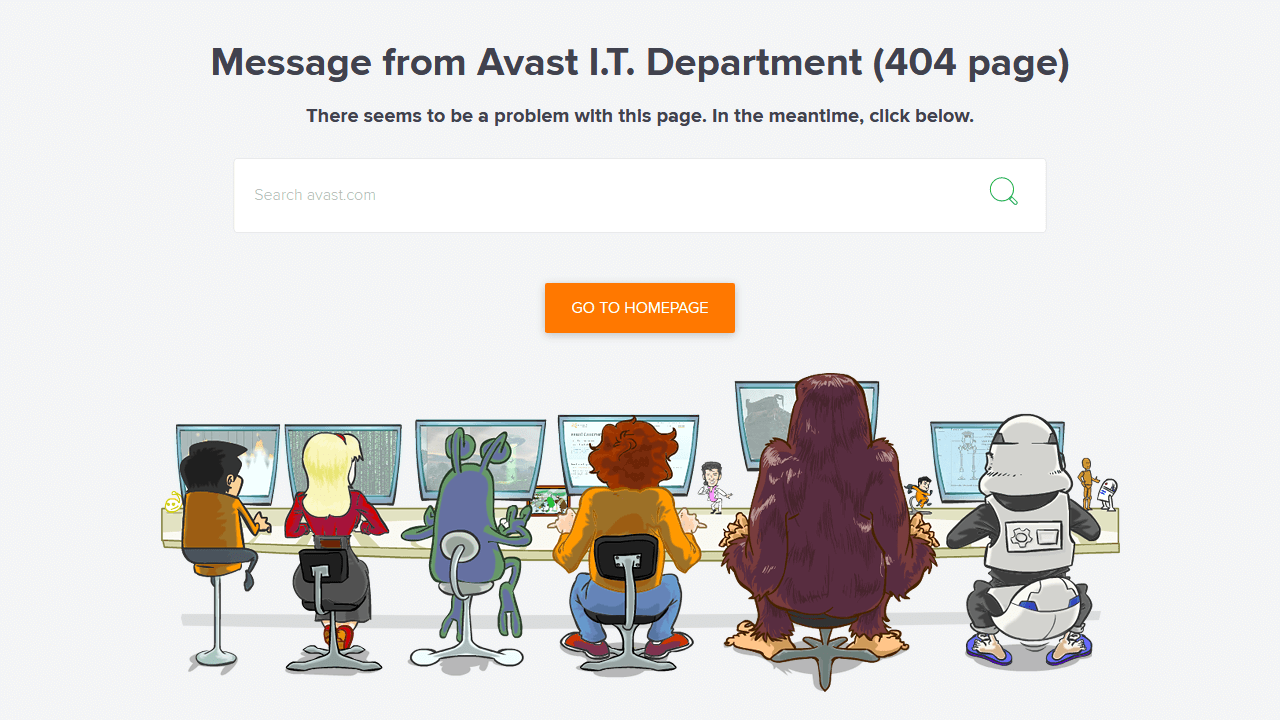

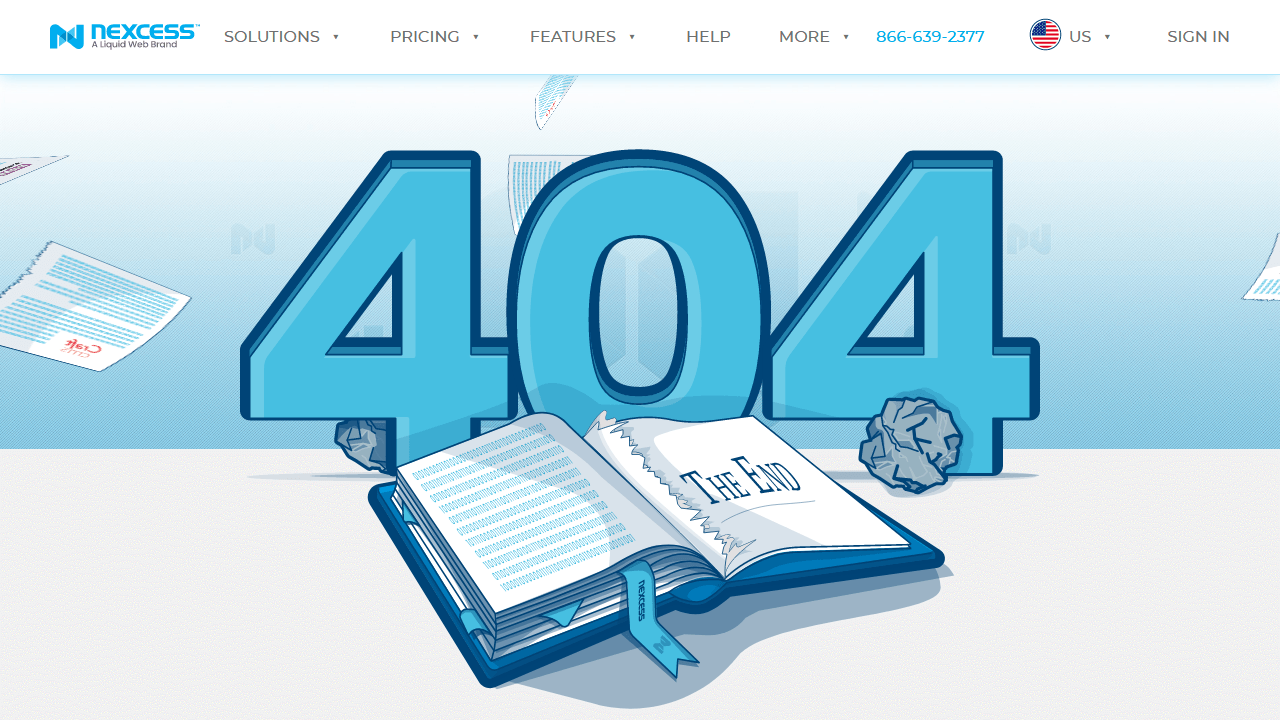

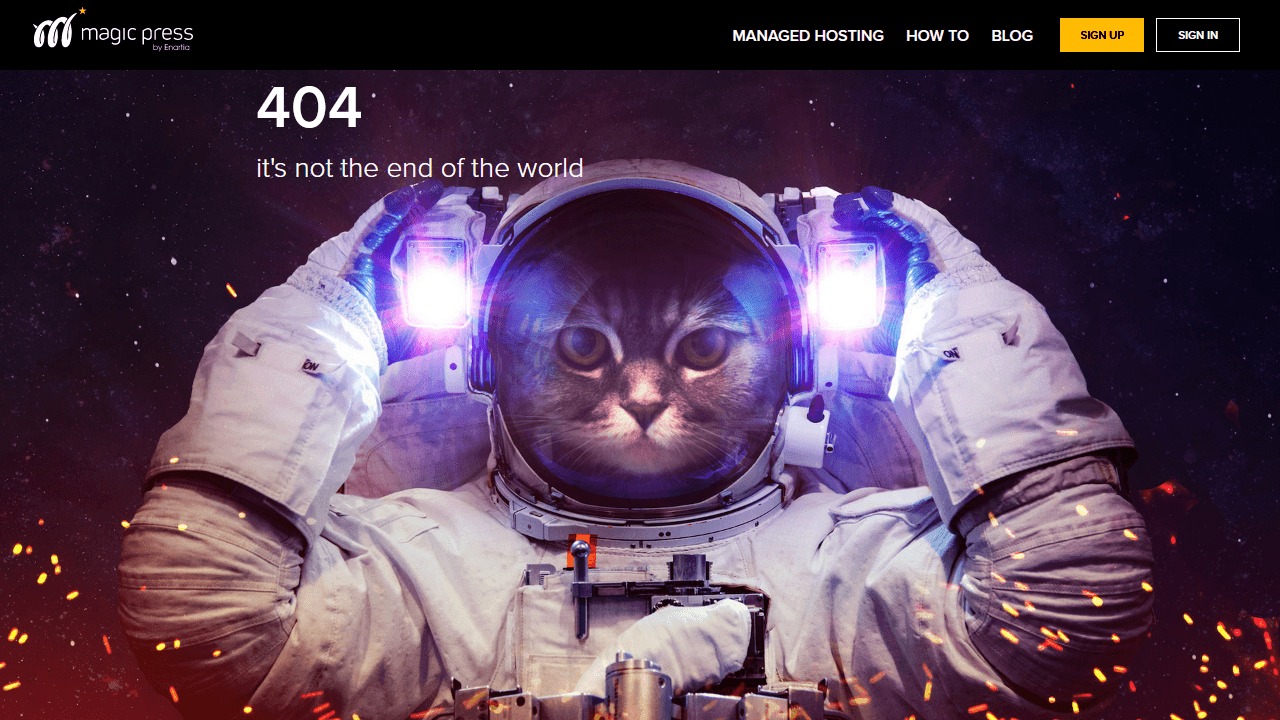

As the owner of Dr. Link Check, a broken link checker app, I have a love-hate relationship with 404 pages. On the one hand, I do everything I can to help our customers find and fix broken links and keep website visitors from running into 404 errors. On the other hand, I truly enjoy a unique and creative 404 page that sets a positive tone and lightens the mood.

While there are still too many websites that use the plain and dull default pages that come with their web servers, some custom 404 pages are gorgeous masterpieces. These hidden error pages are often the places where web designers can show their creativity and express themselves outside the bounds of what was specified by corporate.

For this article, I modified our crawler to search the web for the most beautiful 404 pages. The crawler went through a total of 500,000 websites, reducing the number of candidates to a few thousand, which I then manually narrowed down to the 40 pages presented below.

I hope these designs will give you the inspiration you’ll need to create your own distinctive 404 page. Crafting a pretty error page will not only be fun, but also makes sense from a customer conversion point of view. An appealing 404 page design can take away some of the bitterness of the broken link that a user just encountered. There’s a good chance the user will be pleasantly surprised and stay on your site rather than immediately hitting the back button and never visiting you again.

Now, enough with the chit-chat and on to why you’re actually here: the 404 pages.

Compiling this list of 404 pages was a fun thing for me to do. I hope you enjoyed the article and found some inspiration for your own website projects. If you already have a unique 404 page that you’re proud of, or have designed one based on this article, please message me via Twitter @wulfsoft and tell me about it. I’d love to see your creations.

A slow website isn’t just frustrating for potential customers – it harms your search rankings and makes your site look unprofessional. Decreasing the number of HTTP requests that browsers make while loading one of your pages is an easy way to speed everything up without splurging on expensive hosting. There are plenty of tools you can use to figure out what kinds of requests your site is making and minimize the ones that aren’t needed.

An HTTP request is simply the way that a piece of content (an image or a stylesheet, for example) makes its way from your server to your users’ devices. When you load a page in your browser and something like an image needs to be loaded, your browser will make a request to the server hosting that file. Lots of small requests can take a long time to load compared to one larger request, because one round trip across the network often takes longer than the actual download.
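A quick back-of-the-envelope calculation shows why. The Python sketch below uses a deliberately simplified model in which requests run strictly one after another and each one costs a full round trip; real browsers load several files in parallel, so treat the numbers (50 ms round trip, 1 MB/s bandwidth, 200 KB of content) as illustrative assumptions only.

```python
def sequential_load_time(request_count, total_bytes, round_trip_s, bytes_per_s):
    """Estimate the load time when requests are made one after another.

    Each request pays one network round trip; the payload itself is
    limited by the available bandwidth.
    """
    return request_count * round_trip_s + total_bytes / bytes_per_s


# 200 KB of content, split into 20 small files or delivered as one file.
many_small = sequential_load_time(20, 200_000, 0.05, 1_000_000)
one_large = sequential_load_time(1, 200_000, 0.05, 1_000_000)
print(f"20 requests: {many_small:.2f} s, 1 request: {one_large:.2f} s")
```

Under these assumptions, the 20 small requests take about 1.2 seconds while the single request takes about 0.25 seconds, even though the amount of data is identical.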

Before you can reduce the number of requests that your website makes, you'll need to figure out where they’re coming from and how long each one takes. Conveniently, all major desktop web browsers offer developer tools that include network profiling functionality. To open DevTools in Chrome or Firefox, press F12 (or Cmd+Opt+I on macOS). You might be greeted with a face full of HTML tags and CSS selectors, but don’t be alarmed. Click the “Network” tab to open the network profiling tools.

Try opening another page on your site: you should see a list of requests, along with fields for, at least, status, domain, size, and time. In the waterfall chart at the right, you can see when each HTTP request started and finished loading.

To get a better idea of what a new visitor would experience when loading your website for the first time, try force-reloading your website (Ctrl+Shift+R, or Cmd+Shift+R on macOS). This will prevent your browser from loading certain elements from its cache and force it to make actual HTTP requests for them. You can tell which requests were cached by seeing which rows say “memory cache” (Chrome) or “cached” (Firefox) in the “Size” or “Transferred” columns.
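If you prefer numbers over a waterfall chart, both Chrome and Firefox can export the request list from the “Network” tab as a HAR file, which is plain JSON. The following Python sketch summarizes such a file; the HAR export is not covered in this article, so consider this an optional extra, and note that the function names and the file name in the usage note are made up.

```python
import json
from collections import Counter
from urllib.parse import urlsplit


def summarize_har(har):
    """Summarize a parsed HAR document: request counts and the slowest requests."""
    entries = har["log"]["entries"]
    per_host = Counter(urlsplit(e["request"]["url"]).hostname for e in entries)
    # "time" is the total elapsed time of a request in milliseconds.
    slowest = sorted(entries, key=lambda e: e["time"], reverse=True)[:5]
    return {
        "total_requests": len(entries),
        "requests_per_host": dict(per_host),
        "slowest": [(e["request"]["url"], e["time"]) for e in slowest],
    }


def load_har(path):
    """Read a HAR file that was exported from the browser's developer tools."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

Calling `summarize_har(load_har("mysite.har"))` returns the total number of requests, a per-host breakdown, and the five slowest requests.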

If mucking around in the DevTools simply isn’t your thing, you can use online services like Pingdom Speed Test to get most of the same information.

Now that you’ve figured out how many and what kinds of HTTP requests your site is making, you can get to work reducing them.

While most frontend frameworks will automatically minify and combine your JavaScript files for you, there might still be full-size scripts, stylesheets, and extra HTML lying around on your site that can easily be made smaller and combined. If you don’t have too many files to minify, you can paste each one into one of many online minifiers (just try searching for “minify js” on DuckDuckGo – they even have a tool built into the search results). Otherwise, the Autoptimize WordPress plugin can take care of that automatically.

While minifying code is critical for decreasing the amount of data browsers have to load, HTTP/2 makes it unnecessary to put all of your scripts and stylesheets into one file. With this newer HTTP standard, browsers can request multiple files in parallel over a single connection. HTTP/2 is faster in lots of other ways as well, so if you haven’t gotten around to updating your web server you should do so soon.

A redirect chain occurs when a URL on your site redirects to another page that in turn redirects to somewhere else. Besides using three or more requests to get one file, redirect chains make it harder for search engines to crawl your site and will decrease your rankings. It can be somewhat difficult to diagnose this issue just by looking at the request statuses in the “Network” tab in DevTools, so some automated tools have the ability to detect redirect chains across every page on your website.

Dr. Link Check is primarily designed to find dead links and links that redirect to parked domains, but it can also find all the redirects and redirect chains by crawling your entire site. After running your website through Dr. Link Check, click on “Permanent redirect” in the “Redirects” section of the results.

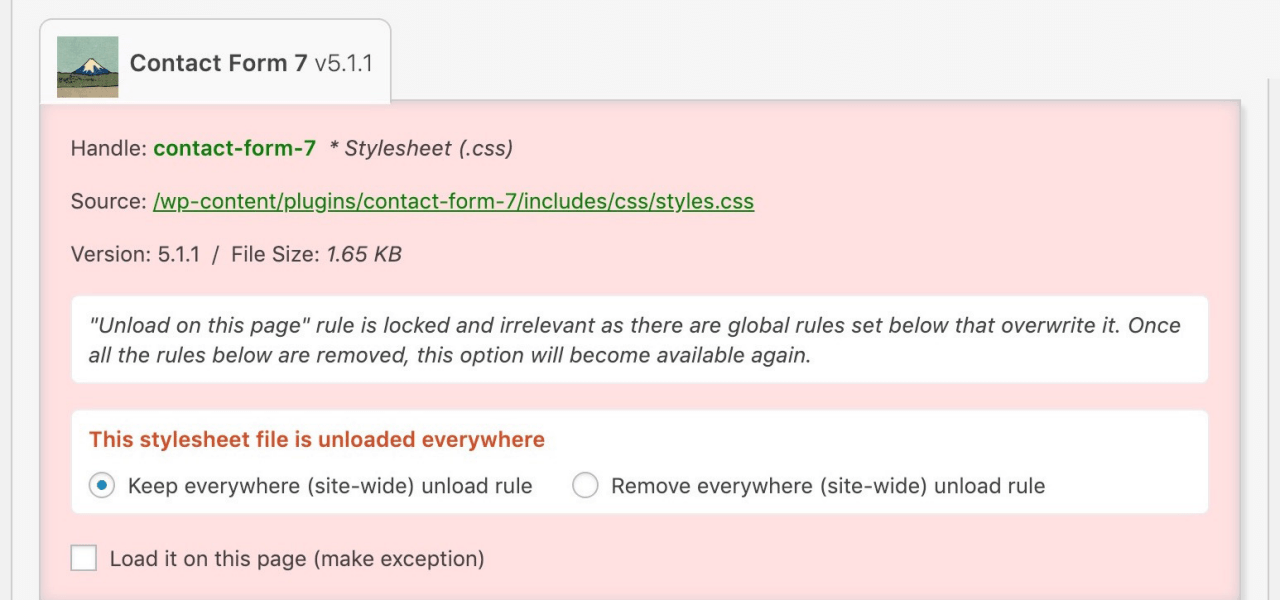
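To check an individual URL by hand, a redirect chain can be traced with a short Python sketch like the one below. It is a minimal illustration under a few assumptions: the function names are invented, the tracer stops after ten hops, and `http_fetch` sends HEAD requests, which some servers answer differently than regular page loads.

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECT_STATUSES = {301, 302, 303, 307, 308}


def trace_redirects(url, fetch, max_hops=10):
    """Return the list of URLs visited, starting with `url`.

    `fetch(url)` must return a (status_code, location_header_or_None) tuple.
    """
    chain = [url]
    while len(chain) <= max_hops:
        status, location = fetch(chain[-1])
        if status not in REDIRECT_STATUSES or not location:
            break
        next_url = urljoin(chain[-1], location)  # Location may be relative
        seen_before = next_url in chain
        chain.append(next_url)
        if seen_before:
            break  # redirect loop
    return chain


def http_fetch(url):
    """Fetch status and Location header with a HEAD request (needs network access)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=10)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=10)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()
```

If `trace_redirects("http://example.com/", http_fetch)` returns more than two URLs, the starting URL goes through a redirect chain, and the link should point directly to the last URL in the list.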

WordPress makes it easy to add plugins and scripts to extend your site’s functionality, but loading each of these assets on every single page makes for slow loading times and high server load. The Asset CleanUp plugin lets you select which exact assets (scripts and stylesheets) load on each page, so you aren’t loading the CSS or JavaScript for a specific part of one page on every other page as well.

For example, without Asset CleanUp, your site might be forcing users’ browsers to download the scripts for a Contact Us widget on every single page, even if that widget is only actually being used on a single page. As you might expect, all those extra HTTP requests add up to make your website slower.

Your site might not just be making requests to your own server: external fonts, social media widgets, ads, and external images all load from other servers and can slow your website down significantly. While a fancy font from Google Fonts might look great, it also means that your website must load an additional file from another place on the web. If you still want to keep the font, think about disabling weights and variants that you aren’t currently using. It’s also recommended to avoid using external images. Hosting them yourself means that you aren’t at the mercy of another provider, and they can load much faster.
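To see at a glance which other servers a page depends on, you could count the external hosts referenced in its HTML. The Python sketch below does this for scripts, images, iframes, and stylesheets; it is a rough approximation with invented names, and it cannot see requests that are triggered later by CSS or JavaScript (such as the font files a Google Fonts stylesheet pulls in).

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class ExternalAssetCounter(HTMLParser):
    """Counts assets per external host referenced directly in a page's HTML."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.page_host = urlsplit(page_url).hostname
        self.hosts = Counter()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("script", "img", "iframe"):
            ref = attrs.get("src")
        elif tag == "link" and "stylesheet" in (attrs.get("rel") or "").lower().split():
            ref = attrs.get("href")
        else:
            ref = None
        if not ref:
            return
        # Resolve relative and protocol-relative references against the page URL.
        host = urlsplit(urljoin(self.page_url, ref)).hostname
        if host and host != self.page_host:
            self.hosts[host] += 1
```

After `feed()` has processed a page, `hosts` maps each external host name to the number of assets loaded from it.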

If you have long pages with lots of images on your site, loading all the images at once, even ones that the user can’t currently see, can take a while and cost you a lot of bandwidth. Instead, try lazy-loading your images using plugins like Smush or Optimole. This way, images only load once the user scrolls down to them instead of all loading when the page is opened. These plugins will also compress your images appropriately to make them load faster without compromising quality.

WordPress is not a static site generator, so whenever a user loads a page, the PHP process has to render that page just for that particular visitor. As you might expect, rendering the same exact page hundreds of different times is not very efficient. Plugins like LiteSpeed Cache and WP Fastest Cache allow WordPress to serve a page from cache instead of rendering it for each individual visitor, saving lots of time.
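The underlying idea is simple enough to sketch in a few lines of Python. This is only a conceptual model of page caching with a fixed lifetime per entry, not how the plugins named above are implemented; `PageCache` and the five-minute default are assumptions made for this example.

```python
import time


class PageCache:
    """Serves rendered pages from memory until they are older than `ttl_seconds`."""

    def __init__(self, render, ttl_seconds=300, clock=time.monotonic):
        self.render = render      # function that builds the HTML for a path
        self.ttl = ttl_seconds
        self.clock = clock        # injectable so that tests can control time
        self.entries = {}         # path -> (expires_at, html)

    def get(self, path):
        now = self.clock()
        cached = self.entries.get(path)
        if cached and cached[0] > now:
            return cached[1]      # cache hit: no rendering needed
        html = self.render(path)  # cache miss or expired entry: render again
        self.entries[path] = (now + self.ttl, html)
        return html
```

With a cache like this, a hundred visitors requesting the same page within five minutes cause a single render instead of a hundred.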

CDNs (content delivery networks) are services that let you offload some HTTP requests (usually images and other assets) to fast servers located near your users. These services usually also perform some caching themselves, decreasing the number of HTTP requests made to your origin server. By configuring your site to proxy through a CDN like Fastly or KeyCDN, big assets will be served by the CDN’s servers instead of yours.

A common cause of a slow website is an abundance of unnecessary HTTP requests. It’s easy to find out where requests are coming from with the developer tools built into your browser. Given that each request takes a certain amount of time to be returned, you can make your site much faster by reducing the total number of requests, reducing redirect chains and external requests, lazy-loading images, and caching content on your server and on a CDN. Even if you have already taken care of most of the low-hanging fruit, it’s good to occasionally review the requests your site is making whenever it feels slower than usual.