Many users hit the back button within seconds of loading a web page, so it’s no surprise that lots of websites are primarily designed to look nice. Humans are inherently visual creatures, but that doesn’t mean that users will overlook poor usability just because a site looks pretty. An ugly website that works great might turn away most visitors, but a pretty website that doesn’t work well won’t keep anyone around. To create a successful website, it’s critical to not just make it look good, but also ensure that it’s easy to use, fast, secure, and in compliance with every applicable law.

Users probably landed on your website for a reason. Maybe they were interested in purchasing your product; maybe they needed to call your customer support. Regardless, your website should make it easy for them to find what they’re looking for. Navigation should be intuitive, clear, and efficient so that visitors don’t get frustrated and leave. If you want to encourage visitors to do something (like sign up for your service), include a clear call to action on the relevant pages.

Your site has to work well on every device a potential customer might be using. Mobile-friendliness is a basic expectation in today’s world. Accessibility and internationalization aren’t nearly as hard as they sound, and they make a huge difference for users who aren’t from your country and those with disabilities.

Professionalism is also a big part of usability. Not proofreading content is a surefire way to lose anyone who reads carefully. Broken links are a more subtle issue, but they can tarnish your reputation and users’ trust when a link goes somewhere unexpected. Periodically clicking every single link on your website is a waste of time, so services like Dr. Link Check make it easy to avoid giving visitors an unwelcome surprise.

Even the most easy-to-use website won’t attract any customers if it isn’t ranked highly by search engines. Following basic SEO guidelines will significantly boost the number of hits your site receives. Simple tweaks like implementing <meta> tags on every page, using expressive <title> elements, and putting descriptive text in alt attributes on images make it much easier for search engines to index and rank your pages.
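As a rough illustration of the three tweaks above, the following sketch scans a page for a missing title, a missing meta description, and images without an alt attribute. The function name `audit` and the choice of checks are assumptions made for this example; a real SEO tool looks at far more.

```python
from html.parser import HTMLParser


class SeoAudit(HTMLParser):
    """Collects the on-page signals mentioned above (illustrative only)."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.has_meta_description = False
        self.images_missing_alt = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.has_meta_description = bool((attrs.get("content") or "").strip())
        elif tag == "img" and "alt" not in attrs:
            # An empty alt="" is valid for purely decorative images,
            # so only a completely missing attribute is reported.
            self.images_missing_alt.append(attrs.get("src"))

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit(html):
    """Return a list of human-readable problems found in the page."""
    parser = SeoAudit()
    parser.feed(html)
    parser.close()
    problems = []
    if not parser.title.strip():
        problems.append("missing <title>")
    if not parser.has_meta_description:
        problems.append("missing meta description")
    for src in parser.images_missing_alt:
        problems.append(f"image without alt text: {src}")
    return problems
```

Running `audit` over the HTML of each page gives a quick list of pages that need attention before a crawler ever sees them.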

Instead of manually adjusting the HTML on every page, your CMS or static site generator should offer some configuration options to automate these fixes. Additionally, a machine-readable XML sitemap is an easy automated addition that will help crawlers find every page you host on your site.
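If your CMS doesn’t generate a sitemap for you, producing one is not much work. The sketch below builds a minimal XML sitemap from a list of URLs; the helper name `build_sitemap` is hypothetical, and optional fields such as last-modified dates are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

The result would typically be saved as sitemap.xml at the root of the site.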

People are impatient when it comes to waiting around for web pages to load. Google found that 53% of mobile visitors left when a page took more than three seconds to load. Making sure your website is fast (especially on mobile devices and connections) is an important and relatively easy way to significantly reduce the number of people who hit the back button before seeing any content.

Assets like images, JavaScript, and CSS files are some of the largest files your visitors will have to load from your site. In the case of images, be sure to downsize and compress them appropriately. For scripts and stylesheets, don’t forget to minify them. If page load times are still too high, try using a CDN, which will cache these large files near your users. Also, a frequently forgotten source of slow load times, if you’re using a CMS like WordPress, is unnecessary plugins. Aside from resulting in longer render times, plugins can sometimes inject additional JavaScript and CSS that your users have to download.

Not using HTTPS is like publicly advertising that you don’t wear a seatbelt: it’s risky, it looks bad, and it doesn’t give you any benefits. Search engines will rank HTTP-only pages lower than sites that allow secure connections. More importantly, you’re needlessly risking the security of your users’ data and most likely violating regulations like the GDPR.

If your site has any kind of login functionality, be sure to treat user data carefully. Store passwords only as salted hashes produced by a strong password-hashing function, so that attackers can’t recover them if your server is compromised. Regardless of what your site does, follow security best practices on your server: use strong passwords, update server software frequently (and plugins, if applicable), use a correctly configured firewall, and limit remote access over SSH and similar protocols.
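To make the advice about password hashing concrete, here is a minimal sketch using PBKDF2 from Python’s standard library. The iteration count and function names are assumptions for this example; in practice, a maintained library that implements bcrypt or Argon2 is often the better choice.

```python
import hashlib
import hmac
import os

# Assumption: tune the iteration count to your hardware and current guidance.
ITERATIONS = 600_000


def hash_password(password, salt=None):
    """Return (salt, digest); a fresh random salt is used unless one is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Recompute the hash and compare it in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)
```

Only the salt and the digest are stored. The plain-text password never needs to touch the database.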

It might be easy to forget about legal and compliance issues when your business is just getting off the ground, but a single violation could cost you a ton of time and money. Be sure to write a privacy policy that complies with regulations like the GDPR and CCPA for customers in the EU and California, and store user data appropriately. It’s far cheaper to get a lawyer involved while writing a privacy policy than it is to defend yourself from a lawsuit. As much as they are a nuisance, cookie consent messages are a requirement in many locations and are relatively easy to implement. Last but not least, be sure to adequately credit photographers and other content producers to avoid copyright hassles in the future.

Not every lead comes from a Google search. Social media marketing is especially important today, and it’s used by most successful sites to attract customers. Regardless of where it’s shared, good content marketing is also an important tool in your toolbox. Instead of just promoting your service, content marketing like articles and videos also offers something of value to viewers. After learning something useful, they will be more interested in checking out your product. You can use A/B testing, where different visitors are given different content, to gauge the effectiveness of your marketing or any other element of your website.
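One common way to split visitors for an A/B test is to hash a stable visitor identifier, so the same person always sees the same variant. The sketch below assumes you already have such an identifier (for example, from a cookie); the function and parameter names are hypothetical.

```python
import hashlib


def assign_variant(visitor_id, experiment, variants=("A", "B")):
    """Deterministically map a visitor to one variant of an experiment."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    # Use the first 8 bytes of the hash as a large, evenly spread integer.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return variants[bucket % len(variants)]
```

Including the experiment name in the hash keeps a visitor’s assignment in one test independent of their assignment in another.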

Sometimes, however, you do need to resort to direct advertising. Marketing tools like Google Ads (formerly Google AdWords) let you put your ads right where your most interested customers are already looking.

An unreliable or broken site is certainly a source of frustration for visitors. Too many site owners fail to implement a good backup policy, aren’t immediately notified when their site goes down, and don’t keep everything up-to-date and secure. Cloud providers like AWS and GCP offer easy ways to save snapshots of your servers, preventing you from losing everything if something goes haywire. Try a service like UptimeRobot to get a message as soon as your site goes down. You don’t want to find out that your site doesn’t work when customers start calling you.

As much as a good visual design is an important part of creating a successful website, everything under the skin is equally important. Keeping your site easy to find and use, fast and secure, and in compliance with regulations is less obvious than a pretty façade, but these things are just as important if you want to retain your customers and attract new ones.

These days, most website owners are keenly aware of the important role that high quality content plays in getting noticed by Google. To that end, businesses and digital marketers are spending increasingly large amounts of time and resources to ensure that websites are spotted by search engine robots and therefore found by their target audiences.

But while every website owner wants high search engine rankings and the corresponding increases in traffic, there are certain areas of a site that are best hidden from the search engine crawlers completely.

You might wonder why it’s good to keep crawlers from indexing parts of your website. In short, it can actually help your overall rankings. If you’ve spent lots of time, money and energy crafting high quality content for your audience, you need to make sure that search engine crawlers understand that your blog posts and main pages are much more important than the more “functional” areas of your website.

Here are a few examples of web pages that you might want the search robots to ignore:

As you can see, there are plenty of instances where you should be actively dissuading search engines from listing certain areas of your site. Hiding these pages helps to ensure that your homepage and cornerstone content get the attention they deserve.

So how can you instruct search engine robots to turn a blind eye to certain pages of your website? The answer lies in noindex, nofollow and disallow. These instructions allow you to define exactly how you want your website to be crawled by search engines.

Let’s dive right in and find out how they work.

As you can probably imagine, adding a noindex instruction to a web page tells a search engine to “not index” that particular area of your site. The web page will still be visible if a user clicks a link to the page or types its URL directly into a browser, but it will never appear in a Google search, even if it contains keywords that users are searching for.

The noindex instruction is typically placed in the <head> section of the page’s HTML code as a meta tag:

<meta name="robots" content="noindex">

It’s also possible to change the meta tag so that only specific search engines ignore the page. For example, if you only want to hide the page from Google, allowing Bing and other search engines to list the page, you’d alter the code in the following way:

<meta name="googlebot" content="noindex">

A bit more difficult to configure and therefore less often used is delivering the noindex instruction as part of the server’s HTTP response headers:

HTTP/2.0 200 OK

…

X-Robots-Tag: noindex
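Since the instruction can arrive either in a meta tag or in a response header, a check has to look in both places. The following sketch shows one way to do that for a page you have already downloaded. The function name `is_noindex` is hypothetical, and header values prefixed with a crawler name (such as "googlebot: noindex") are deliberately not handled.

```python
from html.parser import HTMLParser


def _tokens(value):
    """Split a comma-separated directive list into lowercase tokens."""
    return {t.strip().lower() for t in value.split(",") if t.strip()}


class RobotsMetaParser(HTMLParser):
    """Collects directives from meta tags whose name is in `names`."""

    def __init__(self, names):
        super().__init__()
        self.names = names
        self.tokens = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in self.names:
            self.tokens |= _tokens(attrs.get("content") or "")


def is_noindex(html, headers=None, crawler="googlebot"):
    """True if the page asks the given crawler not to index it."""
    parser = RobotsMetaParser({"robots", crawler.lower()})
    parser.feed(html)
    header_values = {k.lower(): v for k, v in (headers or {}).items()}
    found = parser.tokens | _tokens(header_values.get("x-robots-tag", ""))
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in found or "none" in found
```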

These days, most people build sites using a content management system like WordPress, which means you won’t have to fiddle around with complicated HTML code to add a noindex instruction to a page. The easiest way to add noindex is by downloading an SEO plugin such as All in One SEO or the ever-popular alternative from Yoast. These plugins allow you to apply noindex to a page by simply ticking a checkbox.

Adding a nofollow instruction to a web page doesn’t stop search engines from indexing it, but it tells them that you don’t want to endorse anything linked from that page. For example, if you are the owner of a large, high authority website and you add the nofollow instruction to a page containing a list of recommended products, the companies you have linked to won’t gain any authority (or rank increase) from being listed on your site.

Even if you’re the owner of a smaller website, nofollow can still be useful:

Even if your pages only contain internal links to other areas of your website, it can be useful to include a nofollow instruction to help search engines understand the importance and hierarchy of the pages within your site. For example, every page of your site might contain a link to your “Contact” page. While that page is super important and you’d like Google to index it, you might not want the search engine to place more weight on that page than other areas of your site, just because so many of your other pages link to it.

Adding a nofollow instruction works in exactly the same way as adding the noindex instruction introduced earlier, and can be done by altering the page’s HTML <head> section:

<meta name="robots" content="nofollow">

If you only want certain links on a page to be tagged as nofollow, you can add rel="nofollow" attributes to the links’ HTML tags:

<a href="https://www.example.com/" rel="nofollow">example link</a>
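To see how the page-level meta tag and the link-level rel attribute combine, the sketch below lists every link on a page together with its effective nofollow status. The helper name `classify_links` is an assumption for this example.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Records each link and whether the page as a whole is nofollow."""

    def __init__(self):
        super().__init__()
        self.page_nofollow = False
        self.links = []  # list of (href, has_rel_nofollow)

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = (attrs.get("content") or "").lower()
            if "nofollow" in [t.strip() for t in content.split(",")]:
                self.page_nofollow = True
        elif tag == "a" and attrs.get("href"):
            # rel is a space-separated list, e.g. rel="nofollow noopener".
            rel = (attrs.get("rel") or "").lower().split()
            self.links.append((attrs["href"], "nofollow" in rel))


def classify_links(html):
    """Map each href to True if it is nofollow (by page or by link)."""
    collector = LinkCollector()
    collector.feed(html)
    return {href: collector.page_nofollow or nofollow
            for href, nofollow in collector.links}
```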

WordPress website owners can also use the aforementioned All in One SEO or Yoast plugins to mark the links on a page as nofollow.

The last of the instructions we are discussing in this blog post is “disallow.” You might be thinking that this sounds a lot like noindex, but while the two are very similar, there are slight differences:

As you can see, disallowing a page means that you’re telling the search engine robots not to crawl it at all, which signifies that it has no use at all for SEO. Disallow is best used for the pages on your site that are completely irrelevant to most search users, such as client login areas or “thank you” pages.

Unlike noindex and nofollow, the disallow instruction isn’t included in a page’s HTML code or HTTP response, but instead is placed in a separate file named “robots.txt.”

A robots.txt file is a simple plain text file that can be created with any basic text editor and sits at the root of your site (www.example.com/robots.txt). Your site doesn’t need a robots.txt for search engines to crawl it, but you will need one if you want to use the disallow directive to block access to certain pages. To do that, you’ll simply list the relevant parts of your site on the robots.txt file like this:

User-agent: *

Disallow: /path/to/your/page.html
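Before publishing a rule like this, you can check its effect locally. The sketch below feeds the example rules to Python’s built-in robots.txt parser; the wrapper function `is_allowed` is a hypothetical helper, not part of any crawler.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, as they would appear in robots.txt.
rules = """\
User-agent: *
Disallow: /path/to/your/page.html
"""


def is_allowed(robots_txt, url, user_agent="*"):
    """True if the given crawler may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

With these rules, `is_allowed(rules, "https://www.example.com/path/to/your/page.html")` is false, while other pages on the site remain crawlable.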

WordPress website owners can use the All in One SEO plugin to quickly generate their own robots.txt file without the need to access the content management system’s underlying file structure directly.

Do you know for certain which parts of your website are marked as noindex and nofollow or are excluded from being indexed by a disallow rule? If you are not sure, you might consider taking an inventory and reviewing your past decisions.

One way to do such an inventory is to go to www.drlinkcheck.com, enter the URL of your site’s homepage, and hit the Start Check button.

Dr. Link Check’s primary function is to reveal broken links, but the service also provides detailed information on working links.

After the crawl of your site is complete, switch to the All Links report and create a filter to only show page links tagged as noindex:

You now have a custom report that shows you the pages that contain a noindex tag or have a noindex X-Robots-Tag HTTP header.

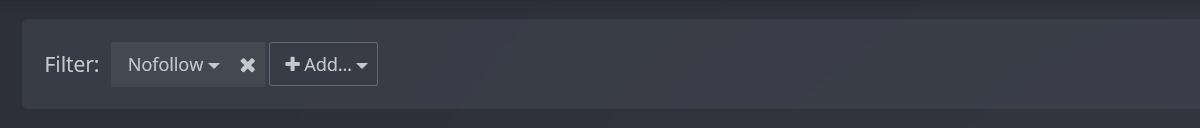

If you want to see all links that are marked as nofollow, switch to the All Links report, click on Add… in the filter bar, and select Nofollow/Dofollow from the drop-down menu.

By default, the Dr. Link Check crawler ignores all links disallowed by the rules found in the site’s robots.txt file. You can change that in the project settings:

Now switch to the Overview report and hit the Rerun check button to start a new crawl with the updated settings.

After the crawl has finished, open the All Links report, click on Add… in the filter section, and select robots.txt status to limit the list to links disallowed by your website’s robots.txt file.

While the vast majority of website owners are far more interested in getting the search engines to notice the pages of their websites, the noindex, nofollow and disallow instructions are powerful tools to help crawlers better understand a site’s content, and they indicate which sections of the site should be hidden from search engine users.

When building a website, it’s important to consider how easy it is for visitors to navigate to subpages from the homepage. The homepage, of course, will likely generate the most traffic. If visitors can’t easily navigate to lower-level pages from there, your website’s performance will suffer. You can help visitors access relevant subpages by improving your website’s click depth.

Also known as page depth, click depth refers to the total number of internal links, starting from the homepage, visitors must click through to access a given page on the same website. Each click adds another level of click depth to the respective page. The more links a visitor must click through to access a page, the higher the page’s click depth will be.

Your website’s homepage has a click depth level of zero. Any subpages linked directly from the homepage have a click depth level of one, meaning visitors must click a single internal link to access them from the homepage.
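Click depth as defined here is the length of the shortest path from the homepage, which a breadth-first search computes directly. The sketch below assumes the site’s internal links are already available as a dictionary mapping each page to the pages it links to. Both the function name and the sample data in the usage note are made up for illustration.

```python
from collections import deque


def click_depths(links, homepage):
    """Return the minimum number of clicks from the homepage to each page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths
```

For a hypothetical site where the homepage links to /shop and /contact, and /shop links to /shop/jeans, the function reports depth 1 for /shop and /contact and depth 2 for /shop/jeans. Pages that never appear in the result cannot be reached from the homepage at all.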

Click depth is a metric that affects user experience and, therefore, search rankings. Visitors typically want to access subpages easily, with as few links as possible. If a subpage requires a half-dozen or more clicks to access from the homepage, visitors may abandon your website in favor of a competitor’s site.

Google has confirmed that it uses click depth as a ranking signal. John Mueller, Senior Webmaster Trends Analyst at Google, talked about the impact of click depth during a Q&A session. According to Mueller, subpages with a low click depth are considered more important by Google than those with a high click depth. When visitors can access a subpage in just a few clicks from the homepage, it tells Google that the subpage is highly relevant. As a result, Google will give the subpage greater weight in the search engine results pages (SERPs).

An easy way to determine if a site suffers from high click depth is to run a crawl with Dr. Link Check. Even though Dr. Link Check’s primary function is to find broken links, the service can also be used for filtering links based on their depth:

Now you have a list of all internal page links with a click depth higher than five.

If the crawl revealed pages with a high click depth, it’s time to decide what to do about it. Here are five tips that will help you create a strategy for improving your site’s link structure.

The hierarchy of your website’s navigation menu will affect your site’s average click depth. If you use a broad hierarchy with just a few top-level categories and many lower-level categories, you can expect a higher average click depth. With this type of navigation, visitors must click through multiple category levels to access lower-level subpages, resulting in a higher average click depth.

Using a narrow hierarchy for your website’s navigation menu, on the other hand, promotes a lower average click depth. With a narrow hierarchy, your website’s navigation menu will have more top-level categories and fewer lower-level categories, which should allow visitors to access subpages in fewer clicks.

When creating articles, guides, blog posts or other content for your website, include internal links to relevant subpages. Without internal links embedded in content, visitors will have to rely on your website’s navigation menu to locate subpages. Internal links in content offer a faster way for visitors to find and access subpages, which helps keep your website from suffering from a high average click depth.

Keep in mind that internal links are most effective at improving click depth when published on subpages with a low click depth. You can add internal links to all your website’s subpages, but those published on subpages with a click depth level of one to three are most beneficial because they are close to the homepage.

Another way to improve your website’s click depth is to use breadcrumbs for supplemental navigation. What are breadcrumbs? In the context of web development, the term “breadcrumbs” refers to links in a user-friendly navigation system that shows visitors the depth of a subpage’s location in relation to the homepage. An e-commerce website, for instance, may use the following breadcrumbs on the product page for a pair of men’s jeans: Homepage > Men’s Apparel > Jeans > Product Page. Visitors to the product page can click the breadcrumb links to go up one or more levels.

Breadcrumbs shouldn’t be used as a substitute for your website’s navigation menu. Rather, you should use them as a supplemental form of navigation. Add breadcrumbs to each subpage to show visitors where they are currently located on your website in relation to the homepage. You can add breadcrumbs manually, or if your website is built on WordPress, you can use a plugin to add them automatically. Yoast SEO and Breadcrumb NavXT are two popular plugins that feature breadcrumbs. Once they are activated, you can configure either of these plugins to automatically integrate breadcrumbs into your website’s pages and posts.

You can also use a visitor sitemap to lower your website’s average click depth. Not to be confused with search engine sitemaps, visitor sitemaps live up to their name by targeting visitors. Like search engine sitemaps, they contain links to all of a website’s pages, including the homepage and all subpages. The difference is that visitor sitemaps feature a user-friendly HTML format, whereas search engine sitemaps feature a user-unfriendly XML format.

After creating a visitor sitemap, add a site-wide link to it somewhere in your website’s template, such as the footer. Once published, the visitor sitemap will instantly lower the click depth of most or all of your website’s subpages.

While optimizing your website for a lower average click depth can improve its performance, you shouldn’t overdo it. Linking to all your website’s subpages directly from the homepage won’t work. Depending on the type of website you operate, as well as its age, your site may have hundreds or even thousands of subpages. Linking to each one creates a messy and cluttered homepage without any sense of structure.

A high average click depth sends the message that your website’s subpages aren’t important. At the same time, it fosters a negative user experience by forcing visitors to click through an excessive number of internal links. The good news is you can lower your website’s average click depth by using a narrow hierarchy for the navigation menu, including internal links in content, using breadcrumbs and creating a visitor sitemap. These strategies will help you improve your site rankings as well as improve your visitors’ experience.

Links are the very foundation of the web. They connect web resources with each other and make it possible for visitors to navigate between pages and allow pages to reference images and other content.

Unfortunately, unlike diamonds, links are not forever. They have a tendency to break over time. Companies go out of business, servers are shut down, blog posts get deleted, domains expire… the web is dynamic, and there are lots of reasons why a link that works today might stop working tomorrow.

At best, a broken link is merely annoying and results in a poor user experience. At worst, it can pose a security threat to anyone visiting the website.

Imagine what could happen if Google shut down its Analytics service and later let the google-analytics.com domain expire. There would be millions of websites left with obsolete script code that attempts to load and run code from https://www.google-analytics.com/analytics.js. A third party could snatch up the expired domain and serve malicious JavaScript code under this URL. This is one form of an attack called Broken Link Hijacking.

Broken Link Hijacking is an exploit in which an attacker gains control over the target of a broken link.

Typical candidates for link hijacking include:

Depending on how the hijacked link is embedded into the website’s code, there are different ways to exploit the vulnerability, with varying levels of risks.

If you have embedded an external script into your website (using code like this: <script src="https://example.com/script.js"></script>) and the link’s domain name gets taken over, an attacker can inject arbitrary code into the site.

You might ask what harm could come from some extra JavaScript code. The answer is plenty. Here are a few examples of how an attacker could exploit this vulnerability:

The ability to execute attacker-supplied code basically makes this a Stored Cross-Site Scripting (XSS) vulnerability, which Bugcrowd classifies as a P2 (high risk) issue.

A hijacked link to an image (<img src="https://example.com/image.jpg">) or style sheet (<link href="https://example.com/styles.css" rel="stylesheet">) is not as bad as a hijacked script link, but can still have serious security implications:

A hijacked style sheet can, for example, be used to swap in images (background: url("https://example.net/hacked.gif")) and to inject text (body::before { content: "HACKED!" }).

Attacks like these are often referred to as defacement or content spoofing and typically fall into Bugcrowd’s P4 (low risk) category.

It’s also worth noting that each request made to an attacker-controlled external server leaks information about both the website and the visitor. The attacker is able to track who visits the site (IP address, browser user-agent, referring website) and how often.

When you link to an external page from your site (<a href="https://example.com/">Link</a>), this link can be seen as a recommendation. You are indicating that the content of the page is relevant and worth a visit, otherwise you wouldn’t have included the link as part of your own content.

Gaining access to the target of the link allows an attacker to exploit the trust that your visitors give you and your recommendation in order to:

This is basically an impersonation attack. The attacker pretends that the linked website is legitimate and from a trusted source. Bugcrowd rates Impersonation via Broken Link Hijacking as P4 (low risk).

Subresource Integrity (SRI) allows you to ensure that linked scripts and style sheets are only loaded if they haven’t changed since the page was published. This is accomplished by computing a cryptographic hash of the content and adding it to the <script> or <link> element via the integrity attribute (as a base64-encoded string):

<script src="https://example.com/script.js" integrity="sha384-/u6/iE9tq+bsqNaONz1r5IjNql63ZOiVKQM2/+n/lpaG8qnTYumou93257LhRV8t" crossorigin="anonymous"></script>

Before executing a script or applying a style sheet, the browser compares the requested resource to the expected integrity hash value and discards it if the hashes don’t match.
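The integrity value itself is easy to compute. The following sketch produces it from the bytes of a file you have already downloaded and reviewed; the function name `sri_hash` is hypothetical.

```python
import base64
import hashlib


def sri_hash(content, algorithm="sha384"):
    """Return an integrity attribute value such as 'sha384-...' for the bytes."""
    digest = hashlib.new(algorithm, content).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"
```

The returned string goes into the integrity attribute unchanged. If the file on the remote server is later altered by even a single byte, the hash no longer matches and the browser refuses to use it.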

By adding a Content-Security-Policy HTTP header to your server’s responses, you can restrict which domains resources can be loaded from:

Content-Security-Policy: default-src 'self' example.net *.example.org

In this example, resources (such as scripts, style sheets, images, etc.) may only be requested from the site’s own origin (self, excluding subdomains), example.net (excluding subdomains), and subdomains of example.org (the *. wildcard does not match example.org itself). Requests to other origins are blocked by the browser.
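The matching rules can be approximated in a few lines. This is a deliberately simplified sketch that compares host names only: real browsers also take scheme, port, and path into account, and 'self' refers to the full origin rather than just the host. Following the CSP specification, a *. wildcard is treated as covering subdomains but not the bare domain. All function names are assumptions for this example.

```python
from urllib.parse import urlsplit


def host_matches(source, host, own_host):
    """Check one source expression against a host name (simplified)."""
    if source == "'self'":
        return host == own_host
    if source.startswith("*."):
        # "*.example.org" matches "cdn.example.org" but not "example.org".
        return host.endswith(source[1:])
    return host == source


def is_allowed_by_policy(sources, url, page_url):
    """True if any source expression in the list permits the resource URL."""
    host = urlsplit(url).hostname
    own_host = urlsplit(page_url).hostname
    return any(host_matches(source, host, own_host) for source in sources)
```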

A Content Security Policy doesn’t help when one of your trusted domains gets hijacked, but it does make sure that you don’t accidentally embed resources from unexpected sources, whether that’s due to a simple typo or an obsolete link on an old and long-forgotten page.

Broken links happen. And when they do, it’s always better to know sooner rather than later, before an attacker can exploit the issue. Our link checker, Dr. Link Check, allows you to schedule regular scans of your website and notifies you of new link problems by email. Our crawler not only looks for typical issues like 404s, timeouts, and server errors, but also checks if links lead to parked domains.

Quite often, redirects are an early indicator that a link might break soon. When a website is redesigned and restructured, redirects are used to map the old URL structure to the new one. This typically works fine for the first redesign, but with each new restructure, the redirect chains get longer and longer, with more potential breaking points. It’s therefore advisable to keep a close eye on redirected links and update them if necessary.
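To illustrate how chains grow with each restructure, the sketch below follows a redirect map from an old URL to its final destination and reports every hop along the way. The map is a plain dictionary standing in for real HTTP responses, and the function name and hop limit are assumptions.

```python
def redirect_chain(url, redirects, max_hops=10):
    """Follow a redirect map and return every URL visited, in order."""
    chain = [url]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError(f"redirect loop at {target}")
        if len(chain) - 1 >= max_hops:
            raise ValueError(f"more than {max_hops} redirects")
        chain.append(target)
    return chain
```

A chain of length one means the link resolves directly. Anything longer is a candidate for updating the link to point straight at the final URL.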

In order to identify redirects on your website, run a scan in Dr. Link Check and click on one of the items in the Redirects section of the Overview report to see the details.

A broken external link doesn’t just disrupt the visitor experience; it can also have serious security implications. An attacker might be able to hijack the broken link and gain control over the link’s target. In the worst case, this can lead to an account takeover and the theft of sensitive data.

Using modern browser security features like Subresource Integrity and Content Security Policy, you can mitigate these risks. Regular crawls with a broken link checker help you identify broken links early and reduce the attack surface.

Finishing the initial version of your site is only the first step on your journey as a website owner. Now that you have a website, tracking its vital statistics is crucial for success.

It’s easy to overlook the trivial things that negatively impact your website’s professionalism, security, Google rankings, and ultimately the revenue you make from it.

Luckily, there are a variety of tools and services that take the grunt work out of managing a website. When you maintain these eight essential elements of any successful website, your site’s odds of being successful will increase tremendously.

If your website is suddenly getting a lot more (or fewer) visitors, then it’s good to know when it started happening and why so that you can either capitalize on its newfound popularity or refresh your SEO strategy. It’s also useful to see which devices your users browse your site with, which sites they came from, and where they’re located.

User analytics packages (e.g., Google Analytics or Cloudflare Web Analytics) allow you to track a variety of statistics about the people who use your website, and what they do on it. Google Analytics can even send you an email alert if certain conditions are met (e.g., a sudden spike in traffic).

If you’re getting a lot of hits to your homepage but only a few purchases, then you can also see how much time users are spending on your site, and how many of them make it to each step in the process of buying something.

Links you make to other sites could suddenly stop working at any time if a website that you linked to is revamped, or a domain is sold to someone else. As bad as 404 errors are for your professionalism, the worst situation is when a website changes hands and redirects to something like a phishing site, or a parked domain full of ads.

To avoid having to manually check every link all the time, Dr. Link Check makes sure that all the links on your entire site (including images and external stylesheets) load correctly, have valid SSL certificates, aren’t on a blacklist of malware and phishing sites, and haven’t been parked.

After crawling every link across your whole website, Dr. Link Check prepares a searchable report and lets you download the data as a CSV file to do your own analytics.

A website can’t be successful if it’s down, so services like Uptime Robot and Pingdom check your website’s status every few minutes to make sure it hasn’t encountered an outage. As soon as it does, these services will alert you via an SMS message, email, or various other contact methods, so you can get it working again as quickly as possible.

Uptime Robot can also check protocols other than HTTP/S and generate status pages. Pingdom includes a full performance monitoring and analytics solution, as well. Each will load your site from multiple locations to determine if an outage is only affecting people in a certain geographical area.

Nothing erodes user trust and confidence quite like a security breach, so it’s of the utmost importance to avoid them entirely. Even if you follow good development practices and keep all your software up to date, it’s still possible to mess up somewhere, leaving an opportunity for a hacker to sabotage your business.

While automated tools aren’t a perfect substitute for a professional security audit, tools including Website Vulnerability Scanner, Mozilla Observatory, and WPScan (if you have a WordPress-based site) can help pinpoint configuration errors, XSS and SQL injection bugs, and outdated server software to keep your customers’ data secure.

Whether you simply have a few outdated plugins, or you forgot to sanitize user input in a hardly used form, an automated check can be the quickest way to find security bugs before hackers take advantage of them.

Your site’s place in search results for any given search term is always changing. Therefore, it’s important to be notified if you suddenly slip off the first page of results for a specific query.

SERPWatcher and RankTrackr are services that check your site’s position in search results on a daily basis and send you a message when it suddenly changes. Additionally, both offer dashboards that display all the different keywords that lead to your site, and where your site has ranked for those searches over time.

Many of these services can also track interactions from social media sites and widgets, so you can completely understand how your users find your website.

Forgetting to renew your SSL certificate is just as bad as your site going down unexpectedly, but with the added consequence that many users may lose trust in your site’s security. Worse, not renewing your domain on time could allow someone to buy it and use it for something else entirely.

To avoid potential customers being turned away by “your connection is not secure” errors, set a calendar reminder and use a service like CertsMonitor to make sure that you renew on time. Many registrars and certificate providers also offer auto-renewal, so you can truly “set it and forget it”.
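If you are curious how an expiry check works under the hood, the following sketch reads a certificate’s expiry date with Python’s standard `ssl` module and converts it into days remaining. The function names and the 30-day threshold in the usage note are assumptions for illustration, not part of any monitoring service.

```python
import socket
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Days left before a certificate's notAfter date (negative once expired).

    `not_after` uses the format returned by getpeercert(),
    e.g. "Jan  5 09:34:43 2018 GMT".
    """
    expires = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return (expires - now) / 86400


def fetch_not_after(hostname, port=443, timeout=10):
    """Connect to `hostname` and return its certificate's notAfter string.

    With the default context, an already expired certificate makes the
    handshake fail with an ssl.SSLError instead of returning a date.
    """
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]
```

A scheduled job could call `days_until_expiry(fetch_not_after("example.com"))` and send a reminder when the result drops below, say, 30 days.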

Did you forget to add a title to a page? Did you miss alt attributes on a few images? Is there no robots.txt? Search engines may rank your pages lower if you don’t fix issues like these.

SEOptimer and RavenTools crawl your site and flag SEO mistakes on each page. Implementing the suggestions from either tool can significantly boost your rankings on Google and other engines. Google itself also offers tools to identify issues and assess your site’s speed and mobile compatibility.
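Two of the checks mentioned above, a missing title and images without alt text, are simple enough to sketch with Python’s built-in HTML parser. This is only a rough single-page illustration under the assumption that you already have the HTML as a string. The class and function names are hypothetical, and real SEO crawlers check far more.

```python
from html.parser import HTMLParser


class SeoChecker(HTMLParser):
    """Collects the page title and the sources of images lacking alt text."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.images_missing_alt = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # Note: an empty alt can be intentional for purely decorative images.
            self.images_missing_alt.append(attrs.get("src", "(no src)"))

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def find_seo_issues(html):
    """Return a list of human-readable issues found in one HTML document."""
    checker = SeoChecker()
    checker.feed(html)
    checker.close()
    issues = []
    if not checker.title.strip():
        issues.append("missing or empty <title>")
    for src in checker.images_missing_alt:
        issues.append(f"image without alt text: {src}")
    return issues
```

Running `find_seo_issues` over every page of a site is essentially what a crawler-based checker automates, along with many additional rules.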

The PageRank algorithm deep within the heart of Google ranks sites based on the number and quality of links that point to them. The idea is that high-quality websites will be linked from many other well-ranking sites. When your website is linked from a reputable blog or goes viral on a social media platform, you’ll notice that your site is displayed more prominently in search results.
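The intuition can be illustrated with the simplified textbook version of PageRank, computed by repeated redistribution of scores along links. This is a teaching sketch only: the damping factor of 0.85, the iteration count, and the tiny example graph are assumptions, and Google’s production ranking involves many more signals.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified textbook PageRank.

    `links` maps each page to the list of pages it links to.
    Returns a dict of page -> score; the scores sum to 1.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    pages = sorted(pages)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a small baseline share.
        new_rank = {page: (1 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its score on, split evenly across its links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page without outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank
```

In a toy graph such as `{"blog": ["shop"], "news": ["shop"], "shop": ["blog"]}`, the page with two incoming links ends up with the highest score, and the page nobody links to ends up with the lowest.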

Monitor Backlinks notifies you whenever new links begin pointing to your site, whether they come from good or bad sites. It can also give insight into which websites would give you the most beneficial backlinks.

It’s easy to forget to monitor some vital sign on your website, leading to a significant loss of business. Therefore, using a service to address each of the areas that need to be monitored will allow you to focus on your business, instead of the boring tasks required to keep your website up and running.

From SEO concerns to security, uptime, and even link rot, you can count on these monitoring services to alert you when something goes wrong.

FTP, short for File Transfer Protocol, is an old standard for transferring files from one computer to another. The protocol was first proposed in 1971, long before the advent of the modern TCP/IP-based internet. In spite of its age, FTP is still commonly used, and hundreds of thousands of websites link to files stored on FTP servers (using URLs that start with ftp://).

Until recently, that wasn’t an issue. All major browsers had built-in support for FTP and were able to handle ftp:// links. This situation is changing. The developers of Chrome disabled FTP in version 88 (released on January 19, 2021), and it’s likely that other browsers will follow suit.

The rationale behind this decision is that FTP in its original form is an insecure protocol that doesn’t support encryption. This is understandable, but practically, it breaks existing ftp:// links for the majority of users.

If you want to make sure that your website is free of ftp:// links, follow the steps below.

Go to https://www.drlinkcheck.com/, enter the URL of your website, and press the Start Check button.

Wait until the check is complete and the website is fully crawled. The number of found ftp:// links is displayed in the Link Schemes section. If there are no ftp: items in the list, the crawler didn’t locate any ftp:// links on your site, and you can skip the rest of the post.

Click the ftp: item under Link Schemes to get to the list of ftp:// links and review each item in the list. If you hover over a link and hit the Details button, you can see which pages contain the link (under Linked from). A click on Source will show you the exact location in the HTML source code.
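For a quick spot check of a single page, the same idea can be sketched in a few lines of Python. This is not how Dr. Link Check works internally. It is a minimal illustration that assumes you already have the page’s HTML as a string and only looks at `href` and `src` attributes, and the names `FtpLinkFinder` and `find_ftp_links` are made up for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class FtpLinkFinder(HTMLParser):
    """Collects href/src attribute values that use the ftp: scheme."""

    def __init__(self):
        super().__init__()
        self.ftp_links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name not in ("href", "src") or not value:
                continue
            url = value.strip()
            try:
                scheme = urlsplit(url).scheme
            except ValueError:
                # Malformed URLs are skipped rather than reported.
                continue
            if scheme.lower() == "ftp":
                self.ftp_links.append(url)


def find_ftp_links(html):
    """Return all ftp:// links found in one HTML document, in page order."""
    finder = FtpLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.ftp_links
```

Unlike a crawler, this sketch looks at one document at a time, so it is best suited for verifying a page after you have edited it.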

Now it’s time to decide what to do with the found links. Here are a few options:

https:// URL you can link to.

Fifty years after its introduction, FTP is slowly being phased out as a protocol for serving files on the internet. With major browsers dropping support for FTP, now is a good time to clean up your website and get rid of all FTP links. You surely don’t want your website to appear outdated and broken.