Dr. Link Check finds links in HTML documents (supporting HTML tags like <a>, <area>, <frame>, <iframe>, <img>, <script>, <audio>, <video>, and several more) and CSS files (supporting @import and url(...)). The crawler isn’t able to execute JavaScript code or search for links in JavaScript-generated pages.
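To illustrate what static link extraction means, here is a minimal Python sketch built on the standard library's `html.parser`. It is not Dr. Link Check's actual implementation. The `LINK_ATTRS` mapping and the `extract_links` helper are assumptions made for this example and cover only some of the tags listed above.

```python
from html.parser import HTMLParser

# Assumed tag-to-attribute mapping for illustration; a real crawler handles more tags.
LINK_ATTRS = {
    "a": "href", "area": "href", "frame": "src", "iframe": "src",
    "img": "src", "script": "src", "audio": "src", "video": "src",
}


class LinkCollector(HTMLParser):
    """Collects (tag, url) pairs from the static HTML markup."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        wanted = LINK_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value:
                self.links.append((tag, value))


def extract_links(html):
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links
```

Because the parser never executes scripts, a link that only exists after `document.write(...)` or similar JavaScript has run is not found, which mirrors the limitation described above.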

Supported URL schemes include http, https, data, and mailto. Links with http and https schemes are checked by connecting to the server and requesting the resource; data URLs are checked for syntax errors; and mailto links are verified as having a valid domain with MX records.
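The scheme-dependent handling can be pictured roughly as follows. This is a sketch under assumptions, not the service's code: `plan_check` and `data_url_is_well_formed` are hypothetical names, the data URL check is deliberately rough, and the MX lookup itself is left out because it needs a DNS library.

```python
import base64
import binascii
from urllib.parse import unquote, urlsplit


def data_url_is_well_formed(url):
    """Rough syntax check for data:[<mediatype>][;base64],<data>."""
    header, comma, payload = url[len("data:"):].partition(",")
    if not comma:
        return False  # the comma between header and data is mandatory
    if header.lower().endswith(";base64"):
        try:
            base64.b64decode(unquote(payload), validate=True)
        except (binascii.Error, ValueError):
            return False
    return True


def plan_check(url):
    """Return a (kind, detail) pair describing how a link would be checked."""
    url = url.strip()
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    if scheme in ("http", "https"):
        return ("request", parts.netloc)  # connect and request the resource
    if scheme == "data":
        return ("syntax", data_url_is_well_formed(url))
    if scheme == "mailto":
        first_address = unquote(parts.path).split(",")[0]
        domain = first_address.rpartition("@")[2]
        return ("mx-lookup", domain)  # domain whose MX records would be queried
    return ("unsupported", scheme)
```

For example, `plan_check("mailto:someone@example.com?subject=Hi")` yields the domain `example.com` as the target of the MX lookup.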

The number of active projects you can have depends on which plan you are on (5 for the Standard plan, 10 for the Professional plan, etc.), but it’s always possible to delete existing projects and make room for new ones. This way you can check as many websites as you like.

The only limitation is that just one check can run at a time. If you start a new check while another one is still in progress, the new check is queued and launched later.

Sometimes problems are just temporary – maybe the target server was overloaded at the time of the check, or there was a hiccup somewhere in the network. Issues that often resolve themselves over time include: “Timeout,” “Connect error,” “Send/receive error,” and HTTP 5xx server errors.

Other times, web servers block or limit requests from our servers. For instance, linkedin.com servers deny all requests originating from the Amazon cloud (where our servers are located) with a “999 Request Denied” response. Many servers also have a rate-limiting mechanism in place that blocks requests or slows down responses after a certain number of hits. In these cases, you will typically see 429 (Too Many Requests) or sometimes 403 (Forbidden) and 503 (Service Unavailable) HTTP error codes.

We have also seen servers return an error status code in the HTTP header but still deliver normal-looking content in the body. This typically indicates a configuration issue with the web server or content management system.
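When triaging a report, the error names and status codes mentioned above can be sorted into rough buckets. The following Python sketch only restates that grouping. The function name `possible_causes` and the bucket labels are made up for this example, and a 403 can of course also be a genuine permission problem.

```python
# Error names taken from the text above; bucket labels are illustrative.
TEMPORARY_ERRORS = {"Timeout", "Connect error", "Send/receive error"}


def possible_causes(error=None, status=None):
    """Return a list of plausible explanations for a reported issue."""
    if error in TEMPORARY_ERRORS:
        return ["temporary"]
    if status in (429, 999, 403):
        return ["blocked or rate-limited"]
    if status == 503:
        # 503 is both a 5xx server error and a common rate-limiting response.
        return ["temporary", "blocked or rate-limited"]
    if status is not None and 500 <= status <= 599:
        return ["temporary"]
    return []
```

An issue in the "temporary" bucket is a good candidate for simply rechecking later, while a "blocked or rate-limited" result usually calls for opening the link manually in a browser.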

When our crawler doesn’t crawl the entire site, it’s typically for one of the following reasons:

We sometimes see websites that generate a seemingly never-ending number of new links – a problem known as a crawler trap. A typical example is an online store with faceted navigation that lets visitors filter products by category, price, brand, color, and other criteria. When each filter combination gets its own URL, this easily results in hundreds of thousands, if not millions, of different links. Another example is a calendar that allows visitors to navigate infinitely into the future, generating a new URL for each page.

One way to spot issues like this is to open the All Links report and click on Last page to get to the last found links on the site. If you see a series of similar URLs that differ only in the query string or a path segment, it might be a sign that the site is suffering from a crawler trap.
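If you export the found URLs, the same inspection can be approximated with a short script. The sketch below groups URLs by host and path while ignoring the query string and reports groups that exceed a threshold. The function name `suspicious_groups` and the default threshold of 100 are arbitrary choices for this example, and URLs that differ only in a path segment are not detected by this simple approach.

```python
from collections import Counter
from urllib.parse import urlsplit


def suspicious_groups(urls, threshold=100):
    """Map (host, path) to a URL count for groups with at least `threshold` URLs."""
    counts = Counter()
    for url in urls:
        parts = urlsplit(url)
        counts[(parts.netloc, parts.path)] += 1  # query string is ignored
    return {key: n for key, n in counts.items() if n >= threshold}
```

A single path such as `/search` that accounts for thousands of URLs is a strong hint that filter or paging parameters are generating links without end.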

Once you have identified the problematic URLs, you can exclude them from being crawled in one of the following ways:

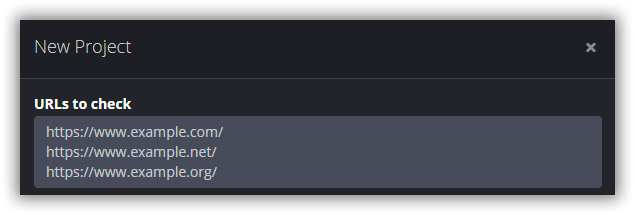

Disallow: /search. Our crawler respects this rule, unless you have the Ignore robots.txt option activated.rel="nofollow" attribute to the link elements in the site’s HTML code.Url STARTSWITH "https://example.com/search".When creating a new project, you can enter up to 10,000 URLs into the URL(s) to check field:
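A robots.txt rule such as Disallow: /search can be tested locally before you deploy it. The sketch below uses Python's standard `urllib.robotparser`. The helper name `is_allowed` and the wildcard user agent are assumptions for this example, and Python's parser may differ in details from the way our crawler interprets the file.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, url, user_agent="*"):
    """Check a URL against robots.txt content supplied as a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Example rules: block everything below /search for all crawlers.
ROBOTS_TXT = "User-agent: *\nDisallow: /search\n"
```

With these rules, `is_allowed(ROBOTS_TXT, "https://example.com/search?q=shoes")` is false, while a URL such as `https://example.com/products` remains allowed.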

Our crawler supports login forms and several other authentication methods (HTTP Basic, HTTP Digest, Bearer token), but this functionality is not yet available through the user interface. If you are on the Professional plan or higher, reach out to us and we will be happy to manually set up a project for you.

By default, the crawler limits parallel downloads from a single host to a maximum of four, and does not exceed eight requests per second. This is less than what is generally allowed by modern web browsers, which typically allow up to six (Chrome, Firefox) or more (Internet Explorer) connections per host.
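To get a feel for what these two limits mean in practice, the following Python sketch simulates request start times without touching the network. It is an illustration only: the function name `schedule` is hypothetical, and modeling "eight requests per second" as a minimum gap of 1/8 second between request starts is an assumption, not a description of how the crawler enforces its limit.

```python
import heapq


def schedule(durations, max_parallel=4, max_per_second=8):
    """Simulate start times (in seconds) for requests to a single host."""
    min_gap = 1.0 / max_per_second      # assumed spacing between request starts
    free_at = [0.0] * max_parallel      # time at which each connection slot is free
    heapq.heapify(free_at)
    next_allowed = 0.0
    starts = []
    for duration in durations:
        slot_free = heapq.heappop(free_at)      # earliest available slot
        start = max(slot_free, next_allowed)    # respect both limits
        starts.append(start)
        heapq.heappush(free_at, start + duration)
        next_allowed = start + min_gap
    return starts
```

With six requests that each take one second, the first four start 0.125 seconds apart, and the fifth has to wait until the first connection slot becomes free again.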

If you see errors that you believe result from too-frequent requests, contact us and we will adjust the crawling speed for your project.

Our crawler doesn’t run the JavaScript tracking code and therefore has no impact on your analytics.

It’s not possible to limit the check to only outgoing links. The crawler needs to collect and check the internal links first to be able to find the ones pointing to external websites.

If your intention is to reduce the number of found links and you are only interested in “normal” hyperlinks (like <a href="page.html">Link</a>), you can exclude other links by entering the following ignore rule under Project Settings → Advanced Settings → Ignore links if…:

HtmlElement != "a"

If you want to exclude links from being checked, you need to add an ignore rule to your project (under Project Settings → Advanced Settings → Ignore links if…).

The following rule will exclude image URLs based on their file extension:

Path ENDSWITH ".jpg" OR Path ENDSWITH ".png" OR Path ENDSWITH ".gif" OR Path ENDSWITH ".svg" OR Path ENDSWITH ".webp"
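For readers who want to preview which URLs such a rule would match, here is a rough Python equivalent of the rule above. It is a sketch, not the rule engine itself: the helper name `is_ignored_image` is made up, and the comparison here is case-sensitive, which may or may not match how ENDSWITH behaves in the service.

```python
from urllib.parse import urlsplit

# Extensions taken from the ignore rule above.
IMAGE_EXTENSIONS = (".jpg", ".png", ".gif", ".svg", ".webp")


def is_ignored_image(url):
    """True if the URL's path (without query string) ends in an image extension."""
    return urlsplit(url).path.endswith(IMAGE_EXTENSIONS)
```

Note that only the path is compared, so `https://example.com/logo.png?v=2` matches even though the full URL ends in a query string.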

Although we recommend that you sign up long-term and set up a recurring monthly or weekly check for your site, it’s also possible to use Dr. Link Check for ad-hoc checks and keep the subscription for only a single month.

If you already know that you won’t be needing the subscription next month, simply go to Account → Subscription Settings and click the Cancel Subscription button. After cancellation, you will still be able to use the service until the period you have already paid for ends, and you won’t be billed again after that.

We don’t currently offer payment by purchase order or invoice. To keep things simple and automated, prepayment via credit card or PayPal is required for all subscriptions.

Links to the invoices are included in your monthly/yearly renewal emails. You can also find them under Account → Subscription Settings → Billing history.

We bill per 10,000 links. If, for example, your subscription allows you to check websites with up to 20,000 links, your invoice will say “Quantity: 2”.
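The quantity on the invoice is simply the link limit expressed in blocks of 10,000. A tiny Python sketch of that arithmetic follows. The function name `invoice_quantity` is hypothetical, and rounding up for limits that are not a multiple of 10,000 is an assumption that the text above does not cover.

```python
LINKS_PER_UNIT = 10_000


def invoice_quantity(link_limit):
    """Number of 10,000-link units for a given link limit (rounded up, by assumption)."""
    return -(-link_limit // LINKS_PER_UNIT)  # ceiling division on integers
```

A limit of 20,000 links gives a quantity of 2, matching the example above.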

You are probably not logged into your account, but instead are using a temporary account with a free Lite subscription that was automatically created when starting a new check via our home page.

Please select Account → Logout and log in using your email address and account password.

You can cancel your subscription via Account → Subscription Settings → Cancel Subscription.

You can contact us at any time with any questions you have. We are glad to help!